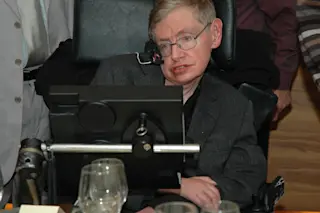

Stephen Hawking attends a dinner at Tel Aviv University. (Credit: The World in HDR/Shutterstock)

Throat cancer, stroke and paralysis can rob people of their voices and strip away their ability to speak. Now, researchers have developed a decoder that translates brain activity into a synthetic voice. The new technology is a significant step toward restoring lost speech. “We want to create technologies that can reproduce speech directly from human brain activity,” Edward Chang, a neurosurgeon at the University of California, San Francisco, who led the new research, said in a press briefing. “This study provides a proof of principle that this is possible.”

People who have lost the ability to speak currently rely on brain-computer interfaces or devices that track eye or head movements to communicate. The late physicist Stephen Hawking, for example, used his cheek muscle to control a cursor that would slowly spell out words. These technologies move a cursor ...