The term “deepfake” has penetrated the 21st-century vernacular, mainly in relation to videos that convincingly replace the likeness of one person with that of another. These often insert celebrities into pornography, or depict world leaders saying things they never actually said.

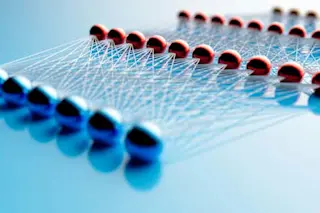

But anyone with the know-how can also use similar artificial intelligence strategies to fabricate satellite images, a practice known as “deepfake geography.” Researchers caution that such misuse could open new channels of disinformation, and even threaten national security.

A recent study led by researchers at the University of Washington is likely the first to investigate how these doctored photos can be created and eventually detected. This is not traditional photoshopping but something much more sophisticated, says lead author and geographer Bo Zhao. “The approach is totally different,” he says. “It makes the image more realistic,” and therefore more troublesome.

Geographic manipulation is nothing new, the researchers note. In fact, ...