(Credit: iStockphoto)


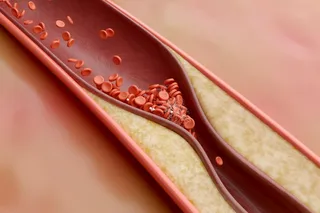

Phil Brewer thought he knew exactly what to do when the ambulance crew wheeled a well-dressed man in his late sixties into the emergency department. What he didn’t know: He was about to be involved in a series of events that would kill his patient. Brewer, then an assistant professor of emergency medicine at Yale School of Medicine, had been alerted by the crew that the man, Sanders Tenant (a pseudonym), had suddenly begun to talk gibberish while dining out with his family. Then his right arm and leg had gone weak.

Brewer suspected an acute stroke, but first he had to rule out conditions that can masquerade as a stroke, such as low blood sugar, a seizure, a brain tumor, and migraine headache. He had only minutes to make the correct diagnosis. Then the gathering medical team would decide whether to use a new stroke treatment ...