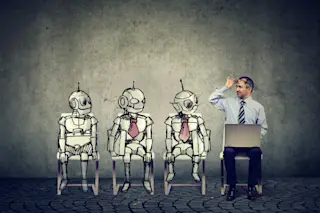

“Who are you?” I ask.

“Cortana,” replies the cheerful female voice coming out of my phone. “I’m your personal assistant.”

“Tell me about yourself,” I say to the Microsoft AI.

“Well, in my spare time I enjoy studying the marvels of life. And Zumba.”

“Where do you come from?”

“I was made by minds across the planet.”

That’s a dodge, but I let it pass. “How old are you?”

“Well, my birthday is April 2, 2014, so I’m really a spring chicken. Except I’m not a chicken.”

Almost unwillingly, I smile. So this is technology today: An object comes to life. It speaks, sharing its origin story, artistic preferences and corny jokes. It asserts its selfhood by using the first-person pronoun “I.” When Cortana lets us know that she is a discrete being with her own unique personality, it’s hard to tell whether we have stepped into the future or ...