When Josh Bongard’s creatures come to life—he must first turn them on—it is obvious that they know nothing about the world. They thrash and fling themselves around, discovering not that they have bodies but that bodies exist, not that they can move but that motion is possible. Gradually they grow more certain—more conscious, you might say. As they do, you sense, coming from somewhere deep inside, a note of triumph like a toddler’s first step.

Bongard’s babies are among the many early signs that our relationship with machines is on the verge of a seismic shift. Ever since the idea of artificial beings first appeared thousands of years ago, the question has been not so much what they might do for us—which services or functions they might bring to the table—but how we would relate to entities that are, and yet are not, human. There has never been a consensus. Frankenstein’s monster was a tragedy, while Pygmalion and Galatea found happiness together.

Humanoid robots previously held sway only in fiction, but scientists say such machines may soon move among us, serving as hospital orderlies and security guards, caring for our elderly, even standing in as objects of friendship or sexual and parental love. As we embark upon this new era, it is worth asking whether such robots might ever seem truly emotional or empathetic. Could they be engineered to show loyalty or to get angry?

Bongard, a roboticist at the University of Vermont, thinks the answer could be yes, and adds that we might respond in kind. Emotional relations with robots “are definitely a prospect in the near future,” he says. “You already see it with children, who empathize with their toys. Many of us have emotional relationships with our pets. So why not robots as well?”

Machines smart enough to do anything for us will probably also be able to do anything with us: go to dinner, own property, compete for sexual partners. They might have passionate opinions about politics or, like the robots on Battlestar Galactica, even religious beliefs. Some have worried about robot rebellions, but with so many tort lawyers around to apply the brakes, the bigger question is this: Will humanoid machines enrich our social lives, or will they be a new kind of television, destroying our relationships with real humans? The only given is that the day we learn the answer draws closer all the time.

Until now, of course, robots have been relatively crude machines, each assigned a single repetitive task—not much different from a washing machine or a lathe. Typically they have been disembodied arms or automated forklifts. Their original engineers rarely saw a reason for function or design to be tied to biological analogues; planes don’t have feathered wings, after all.

Recent research suggests that this is going to change. As it turns out, the more versatile a machine is, the more it will have to look and behave like us. With our legs we can pivot, jump, kick, tiptoe, run, slog, climb, swim, and stop instantly. Our five-fingered hands, with their opposable thumbs and soft, compliant tissues, can hold and control an immense variety of object geometries. Our body is so flexible that we can recruit almost any muscle to the cause of manipulating the world.

To make the transition—to climb our stairs, handle our tools, and navigate our world—future robots will probably have approximately our height and weight, our hands and feet, our gait and posture and rhythms. Soft, flexible bodies could be a precondition for machines that must operate in complex, dynamic, confined spaces, such as a crowd they have to squeeze through.

One obvious domestic use for such humanoids, caring for our aging baby boomers, will require a machine that makes the beds, does the shopping, cooks the meals, and gives medicines and baths. The appetite of the military for machines that can navigate the chaos of the battlefield is without bounds. Every sector of society would love machines that could repair and maintain themselves forever—machines that, like us, could learn from experience, adjust to changing circumstances, and, if needed, evolve. And purely from a business point of view, you do not need to be Alan Greenspan to understand that the more things a machine can do, the bigger the market.

Even if our species looked like something else altogether, we might still end up building humanoid robots to serve us because the benefit would be so great. Today dozens of labs around the world are working on humanlike hands, legs, torsos, and the like, not because the researchers have read too much science fiction but because this approach represents the best solution to real problems. Their efforts to build humanoid machines vary, but they all bring something to the table; in the end, it is by aggregating the best of these efforts that the new robot generation will emerge.

Wisdom of the body

Perhaps the most interesting reason to design robots in our own image is a new theory of intelligence now catching on among researchers in mechanical engineering and cognitive psychology. Until recently the consensus across many fields, from psychology to artificial intelligence (AI), was that control of the body was centralized in the brain. In the context of robotics, this meant that sensory systems would send data up to a central computer (the robot brain), and the computer would grind away to calculate the right commands. Those commands (much like nerve signals) would then be distributed to motors—acting as the robot’s musculature—and the robot, so directed, would move. This model, first defined decades ago when the very first computers were being built, got its authority from our concept of the brain as the center of thought.

As time went on, however, it became apparent that central control required an almost endless amount of programming, essentially limiting what robots could do. The limits became clearer with deeper understanding of how living organisms work: not through commands from some kind of centralized mission control, but via a distributed interaction with their environment.

“The traditional robotics model has the body following the brain, but in nature the brain follows the body,” Fumiya Iida, of MIT’s Computer Science and Artificial Intelligence Laboratory, explains. Decisions flow from the properties of the materials our bodies are made of and their interactions with the environment. When we pick up an object, we are able to hold it not primarily because of what our brain says but because our soft hands mold themselves around the object automatically, increasing surface contact and therefore frictional adhesion. When a cockroach encounters an irregular surface, it does not appeal to its brain to tell it what to do next; instead, its musculoskeletal system is designed so that local impacts drive its legs to the right position to take the next step.

Domo is a new upper-torso humanoid robot at the MIT CSAIL Humanoid Robotics Lab. | Photo Donna Coveney/MIT

The biologist who discovered this last fact, Joseph Spagna, currently at the University of Illinois, teamed up with engineers at the University of California at Berkeley to build a robot inspired by nature. The result, named RHex (for its six legs), is a robot that can traverse varied terrain without any central processing at all. At first it had a lot of trouble moving across wire mesh with large, gaping holes. Spagna’s team made some simple, biologically inspired changes to the legs of the robot. Without altering the control algorithms, they simply added some spines and changed the orientation of the robot’s feet, both of which increased physical contact between the robot and the mesh. That was all it took to generate the intelligence required for the device to move ahead. In a related project, Iida and his MIT group are now building legs that operate with as few controlled joints and motors as possible, an engineering approach known as underactuation.

The theory that much of what we call intelligence is generated from the bottom up—that is, by the body—is now winning converts everywhere. (The unofficial motto of Iida’s group is “From Locomotion to Cognition.”) Some extreme adherents to this point of view, called embodiment theory, speculate that even the highest cognitive functions, including thought, do no more than regulate streams of intelligence rising from the body, much as the sound coming from a radio is modulated by turning the knobs. Embodiment theory suggests that much wisdom is indeed “wisdom of the body,” just as those irritating New Age gurus say.

Starfish turns

Bongard and his babies are part of this revolution. Like many roboticists, he is interested in the art of navigating across different terrains, from sand to rock to grass to swamp. Almost by definition, a versatile machine will require this skill; a housecleaning robot might have to climb stairs or ladders, crawl up walls, and wax a floor, one after the other. The old approach would have been to design a central computer that recognized new terrains and geometries and looked up their characteristics in a database to adjust the robot’s gait one hurdle at a time. But this would have failed, because there are too many variables to program in. “Programming a general-purpose machine to anticipate all eventualities is a classic problem in traditional artificial intelligence,” Bongard says. “It has never been solved. Embodiment suggests a different approach.”

Here’s how it works in one of Bongard’s devices, a black, four-legged starfish about the size of a dinner plate. Switched on, the starfish starts to flop about, all the while recording how its body behaves and generating ideas about how its parts might work in the world—how joint a might influence joint b given c units of force, and so on. It then compares the actual behavior of its body with each model’s predictions to see which models predicted best, and uses the winners to seed the next round. Through something like genetic recombination, the starfish mixes and refines the models, becoming steadily smarter over time. After some specified length of time, the smartest model is chosen as the operating officer—the piece that tells the robot what to do. This event is like the robot’s bar mitzvah, the point at which it is ready to go to work. Now when it receives a task, it hands it to the winning model. The model figures out which joints and motors should do what, distributes those instructions to the parts, and off the robot goes. As it works, the robot keeps track of its own performance. Whenever performance falls off, programmers instruct the robot to evolve a new model that might work better.
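The core of that loop can be caricatured in a few lines of Python. This is a toy sketch under loud assumptions, not Bongard’s software: the hidden “body” is a hypothetical two-number linear coupling (`TRUE_COUPLING`) between forces on two joints and one sensor reading, and the names `body_response`, `prediction_error`, `recombine`, and `evolve_self_model` are illustrative inventions. It shows only the idea described above: random movements, candidate models scored by how well they predict what the body actually did, and recombination of the winners until one model is picked to run the robot.

```python
import random

# Hypothetical stand-in for the robot's physical body. The robot never reads
# these numbers directly; it only observes how its body responds to forces.
TRUE_COUPLING = (0.8, -0.3)


def body_response(action):
    """Simulated sensor reading after applying forces (fa, fb) to joints a and b."""
    fa, fb = action
    return TRUE_COUPLING[0] * fa + TRUE_COUPLING[1] * fb


def prediction_error(model, observations):
    """Mean squared error between a candidate self-model's predictions and reality."""
    total = 0.0
    for (fa, fb), outcome in observations:
        predicted = model[0] * fa + model[1] * fb
        total += (predicted - outcome) ** 2
    return total / len(observations)


def recombine(parent1, parent2, rng, mutation=0.05):
    """Uniform crossover of two models, plus a small Gaussian mutation."""
    return tuple(
        rng.choice((g1, g2)) + rng.gauss(0.0, mutation)
        for g1, g2 in zip(parent1, parent2)
    )


def evolve_self_model(generations=200, population=30, seed=0):
    """Return the candidate self-model that best predicts the observed outcomes."""
    rng = random.Random(seed)

    # "Flopping about": try random actions and record what the body did.
    observations = []
    for _ in range(20):
        action = (rng.uniform(-1.0, 1.0), rng.uniform(-1.0, 1.0))
        observations.append((action, body_response(action)))

    # Start from random guesses about how the joints interact.
    models = [(rng.uniform(-1.0, 1.0), rng.uniform(-1.0, 1.0))
              for _ in range(population)]

    for _ in range(generations):
        # Rank models by how well they predicted the real behavior.
        models.sort(key=lambda m: prediction_error(m, observations))
        survivors = models[: population // 2]
        # Refill the population by recombining the winners.
        children = [
            recombine(rng.choice(survivors), rng.choice(survivors), rng)
            for _ in range(population - len(survivors))
        ]
        models = survivors + children

    # The best predictor becomes the "operating officer."
    return min(models, key=lambda m: prediction_error(m, observations))


if __name__ == "__main__":
    best = evolve_self_model()
    print(f"recovered coupling: ({best[0]:.3f}, {best[1]:.3f})")
```

Run as a script, it should print a pair close to `TRUE_COUPLING`, recovered purely from observed outcomes. Keeping the surviving models unchanged from one round to the next means the best prediction never gets worse; a real self-model would have to capture far more of the body than two numbers.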

“Embodiment allows us to avoid building in our human biases,” Bongard says. “It lets the robot figure out itself what is appropriate for its own body.”

My robot, my self

Embodiment theory speaks to one of the open questions about robots: the nature of our relationships with them. Many versatile machines will work as members of collaborations, including mixed teams of humans and other machines. Perhaps the most obvious such application is soldier robots, but any useful domestic robot would also have to interact with its owner.

It is only common sense that the easier it is for these entities to understand each other, the more effective the team will be. If intelligence, including social intelligence, flows up from the body, it follows that making sure all members have approximately the same kind of body will enhance their ability to relate.

Suppose, for instance, that Robot Bob is struggling to walk on ice. If Robot Alice has learned how to walk on ice herself, her memory of how her body behaved in similar situations will help her recognize the nature of Bob’s problem. Once she understands the situation, she should be able to help Bob by transmitting the right algorithm to him on the spot, or even by offering him a hand. If robots are going to learn by imitation, one of the most powerful learning mechanisms we know, it will clearly be useful for our bodies to be alike.

Even verbal communication will be easier if our machines look like us. “Imagine you use the phrase ‘bend over backward’ with a robot,” Bongard says. “How is the robot supposed to know that this is a metaphor for difficulty? A programmer could write it in ahead of time, but he or she would be working forever. There are just too many phrases like that. But if the robot has a body like ours, if it has a back, then it might be able to understand on its own, automatically.”

Far more is involved in communication than just speech. Candace Sidner, an artificial intelligence expert working for military contractor BAE Systems, is one of many researchers interested in machines capable of understanding and participating in gestural communication. These nonverbal behaviors include eye contact, body movement, shifts in posture, and hand gestures. Sidner’s special interest is engagement, the component of gesturing used to structure the back-and-forth aspect of communication. Her current goal: building a machine that can participate in conversations with two humans at once, figuring out when the humans want to speak to each other so it knows when to fall silent and when to speak up.

Yet it is physical similarity, in the end, that may allow humans to respond to robots in kind. “Once you have a face at all,” Sidner says, “people expect to see certain kinds of information in that face. If they don’t, it seems weird, and that sense adds to the cognitive load of dealing with the robot.”

The history of computer graphics provides an object lesson: Once a representation gets halfway realistic, the market demands increasing levels of fidelity. Stopping short of perfection plunges the user into what is sometimes called “the uncanny valley,” the point at which the lack of realism gets distracting. This played out in an animated movie in which the animation was almost totally realistic except for the characters’ eyes, a distraction remarked upon by almost every critic who weighed in.

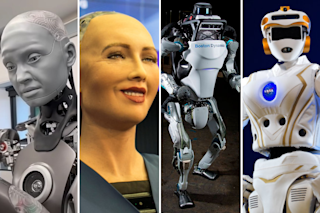

The most difficult barrier to maintaining an emotional relationship with a robot could be the question of sincerity. Does this entity really care about me as a person, or is it just manipulating me to make me think it does? Efforts to clear the sincerity hurdle are just getting off the ground. Take the simplest question: How realistic—how humanlike—will robots have to be to be accepted as sincere? Nobody is sure, and roboticists are spreading their bets across a range of possibilities. Bandit II at the University of Southern California has a simple, cartoonlike face with only rudimentary feature mobility. MIT’s Leonardo has 32 facial features, corresponding to 32 mechanical muscle groups, and looks like a baby animal with big, mobile eyes and floppy, expressive ears. Kokoro’s Actroid DER has a very high level of realism, including arm and torso gesturing.

You often need to see the expression on a person’s face to know how to take what he or she is saying, as we all know from our struggles with faceless e-mail. But we might not need perfect realism to initiate real relationships. Bryce Huebner, an experimental philosopher at the University of North Carolina at Chapel Hill, has found his human subjects are willing to accept the possibility that machines have “beliefs” and even feel pain, but only if those machines have a humanoid face. Huebner suspects that “if people are willing to accept attributions of pain, it might not be hard for them to accept that machines have other feelings, like hoping, wishing, dreaming, or fearing.” Of course realism, no matter how good, won’t be enough. Human beings look pretty realistic, and relationships between them go south all the time. The first International Conference on Human-Robot Personal Relationships is being held this summer in the Netherlands. It will surely not be the last.

Robot love

If humans come to accept emotional relationships with machines, it will add a new layer of complexity to our lives. Some experts see these new bonds as essentially nonthreatening. “I believe that humans will have individualized relationships with robots, but I would not go so far as to propose that they will supplant human relationships,” says Rod Grupen, a roboticist who works on human-robot interaction and communication at the University of Massachusetts at Amherst. “There are lots of species on the planet that do not challenge the relationships we have with other humans,” he adds.

Indeed, psychologists like Sherry Turkle of MIT say robots will never replace other people because they lack a foundation in the common human life cycle. “We are born of mothers, we had fathers, we mature, we make decisions about generativity, we think about a next generation, we face our mortality,” Turkle says. “All of these imply a set of complex relationships charged with anxiety, joy, guilt, and passion. As human beings we are bound by our life trajectory.” In other words, lack of a common biology would impede the deepest of relationships between humans and machines.

Others predict true intimacy. David Levy, president of the International Computer Games Association, analyzes the hardest case of all in his book Love and Sex With Robots. His core point is that there is nothing that psychology knows about human relationships of any kind, including sexual ones, that precludes similar relationships with robots. Indeed, he argues, there is nothing that one can specify about the rewards of human relations that rules out robots’ delivering more of those rewards, whatever they are. Are you interested in a partner that knows a lot about sports? Easy to imagine a robot that can fit the bill. Or would you prefer one that knows nothing about sports but is eager to learn? Easy to imagine that, too.

Levy agrees there will always be something to keep humans interested in one another, but he does not name that “something,” and it is nowhere in his book. Perhaps it is just a pious wish. Whatever else Love and Sex With Robots does, it makes one wonder whether the human species might drive itself to extinction through dalliance with sex robots that unflinchingly fulfill every last erotic wish.

It might prove difficult to stay off that road. Embodiment theory suggests that if we want machines to perform at the highest levels, we will need to give them access to our lives and our world. The robot next door might be the heir to a fortune. It might drive a racy red convertible or be your chess partner or your friend.

Many of us will surely find all this creepy and foreboding, but better functionality has its own logic. At the very least the development of humanoid robots will transform one of our more characteristic drives—the need to know who and what we are at the core.

“At bottom, robotics is about us,” Grupen says. “It is the discipline of emulating our lives, of wondering how we work.” Still, as all engineers know, you never really understand something until you have built it; and if you can build it and it works as designed, you can be confident that you know something basic. Spagna’s cockroach-inspired robot and Bongard’s robotic starfish are baby steps in that direction, with far more progress to come.

Whatever robotics does to the species, for better or worse, once robots take a human form, the old narratives about the mystery of our nature are likely to be transformed at their roots. We may have to learn how to live with understanding ourselves.