Portraits by Patrick Harbron

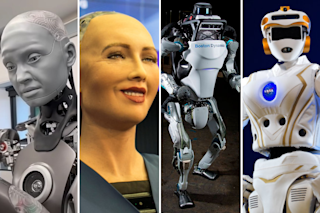

When it comes to having a robot around the house, humans don't seem willing to settle for the simple solution: a mechanized box on wheels. Instead, we appear to be obsessed with having the device resemble us as much as possible. And not just in looks but in the way it talks, walks, thinks, and feels. Just how far should we take this? How human should a humanoid robot be? Recently, Discover, in partnership with The Disney Institute, assembled an extraordinary panel of robotics researchers to grapple with the issue.

Charles Petit

Moderator. Senior writer at U.S. News & World Report. Petit, who previously wrote for the San Francisco Chronicle, is a former president of the National Association of Science Writers. He has a degree in astronomy from the University of California at Berkeley.

Eric C. Haseltine

Senior vice president of research and development at Walt ...