In recent years, the clamor to fight climate change has triggered revolutionary action in numerous areas. Renewable electricity generation now accounts for 30 percent of the global supply, according to the International Energy Agency. The same organization reports that sales of electric cars grew by 40 percent in 2020. And the U.S. recently committed to halving its greenhouse gas emissions by 2030.

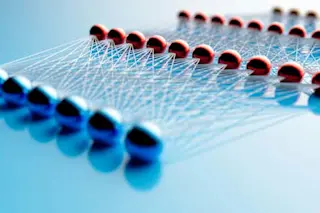

Now the same drive for change has begun to permeate the scientific world. One area of concern is the energy consumed, and the carbon emitted, by computation. In particular, the growing interest in machine learning is forcing researchers to consider the emissions produced by the energy-hungry number-crunching required to train these models.

At issue is an important question: How can the carbon emissions from this number-crunching be reduced?

Now we have an answer thanks to the work of David Patterson at the University of California, Berkeley, with ...