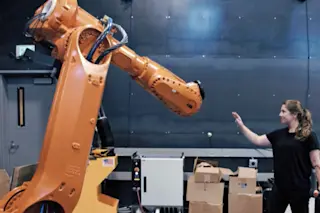

(Credit: Madeline Gannon/Instructables)

You don't need to be Tony Stark to have a robot assistant anymore. During a fellowship at Autodesk’s Pier 9 workshop, Madeline Gannon, a Ph.D. candidate at Carnegie Mellon University, combined motion capture technology with a robotic arm to create an interactive system that reads human movements and responds to them. In the video below, watch a robotic arm observe and follow Gannon, turning and flexing to match her movements like a charmed snake.

The robot “sees” with a Vicon motion-capture camera system paired with reflective wearable markers that tell it where to look. Quipt, the control software Gannon developed, acts as an interpreter, translating human motions into instructions for the robot. The actions themselves come from Robo.Op, an open-source library of movement commands created by Gannon. Quipt also includes an Android app that provides a continuous readout of its commands so controllers can ...