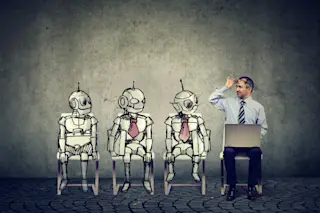

In the “Star Trek: The Next Generation” episode “The Measure of a Man,” Data, an android crew member of the Enterprise, is to be dismantled for research purposes unless Captain Picard can argue that Data deserves the same rights as a human being. Naturally the question arises: What is the basis upon which something has rights? What gives an entity moral standing?

The philosopher Peter Singer argues that creatures that can feel pain or suffer have a claim to moral standing. On his view, nonhuman animals have moral standing because they can feel pain and suffer. Limiting moral standing to humans would be a form of speciesism, something akin to racism and sexism.

Without endorsing Singer’s line of reasoning, we might wonder whether it can be extended further to an android like Data. Doing so would require that Data can either feel pain or suffer. And how you answer that depends ...