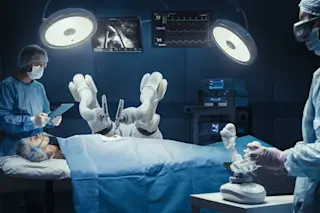

Oh, artificial intelligence, how quickly you grow up. Just three months ago you were learning to walk, and we watched you take your first, flailing steps. Today, you’re out there kicking a soccer ball around and wrestling. Where does the time go?

Indeed, for the past few months we’ve stood by like proud parents and watched AI reach heartwarming little milestones. In July, you’ll recall, DeepMind, Google’s artificial intelligence company in the United Kingdom, developed an algorithm that learned how to walk on its own. Researchers built a basic function into their algorithms that rewarded the AI only for making forward progress. As the AI sought to maximize that reward, complex behaviors like walking and avoiding obstacles emerged. This month, researchers at OpenAI, a non-profit research organization, used a similar approach to teach AI to sumo wrestle, kick a soccer ball and tackle. Their AI consisted of two humanoid agents that were ...

First AI Learned to Walk, Now It's Wrestling, Playing Soccer

Explore artificial intelligence milestones as AI learns to walk and sumo wrestle, showcasing competitive self-play in action.
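For readers curious what a reward for "making forward progress" might look like in practice, here is a minimal sketch in Python. It is not DeepMind's or OpenAI's code. The function names, the one-dimensional position, and the sample trajectories are all assumptions made up for illustration. The sketch also assumes the reward is simply the distance moved forward in each step, so stepping backward costs the agent.

```python
from typing import Sequence


def forward_progress_reward(x_before: float, x_after: float) -> float:
    """Hypothetical per-step reward: how far the agent moved forward.

    Assumption: position is a single number along the direction of travel,
    and moving backward yields a negative reward.
    """
    return x_after - x_before


def episode_return(positions: Sequence[float]) -> float:
    """Add up the per-step rewards along a recorded trajectory."""
    return sum(
        forward_progress_reward(before, after)
        for before, after in zip(positions, positions[1:])
    )


# Two made-up trajectories: one agent stumbles in place, one walks steadily.
stumbling = [0.0, 0.1, 0.0, 0.2, 0.1]
walking = [0.0, 0.3, 0.6, 0.9, 1.2]

if __name__ == "__main__":
    print("stumbling return:", round(episode_return(stumbling), 3))
    print("walking return:", round(episode_return(walking), 3))
```

Nothing in the reward mentions legs, balance or obstacles. A learning algorithm that keeps adjusting its behavior to raise this total would favor whatever movements carry it forward, which is the sense in which walking can emerge on its own.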
