Predicting the future position of objects comes naturally to humans, but it is quite difficult for a computer. (Credit: Shutterstock)

In many ways, the human brain is still the best computer around. For one, it's highly efficient. Our largest supercomputers require millions of watts, enough to power a small town, but the human brain runs on roughly the same power as a 20-watt bulb. While teenagers may seem to take forever to learn what their parents regard as basic life skills, humans and other animals are also capable of learning very quickly. Most of all, the brain is truly great at sorting through torrents of data to find the relevant information to act on. At an early age, humans can reliably perform feats such as distinguishing an ostrich from a school bus, an achievement that seems simple but illustrates the kind of task that even our most powerful ...

Computers Learn to Imagine the Future

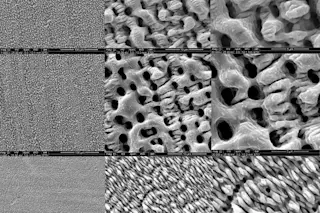

Explore how the sparse prediction machine at Los Alamos revolutionizes neuromimetic computing, simulating human-like predictions.
