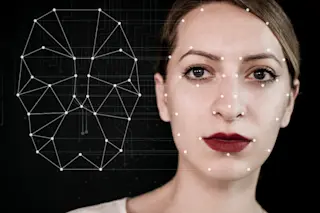

Waiting in a sluggish line to speak with a cranky border agent may soon be a thing of the past: Imagine gaining entry to another country within 15 seconds, with no human interaction or physical documents required. This scenario already exists with the Smart Tunnel, which uses facial and iris recognition technology to verify passengers’ identities via 80 cameras and processes the data with artificial intelligence. Dubai International Airport piloted the Smart Tunnel, the world’s first technology of its kind, in 2018.

While it may not always look ripped from a sci-fi movie, you’ve likely already undergone some sort of biometric screening process in U.S. airports. After the 9/11 attacks, the Department of Homeland Security (DHS) and its interior agencies ramped up security measures to confirm travelers’ identities and snuff out terrorism. In 2004, U.S. airports began screening the faces and fingers of passengers flying into the ...