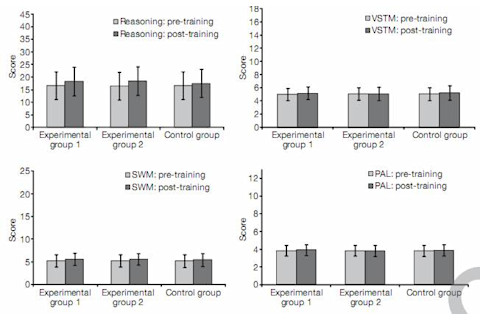

You don’t have to look very far to find a multi-million-pound industry supported by the scantiest of scientific evidence. Take “brain-training”, for example. This fledgling market purports to improve the brain’s abilities through the medium of number problems, Sudoku, anagrams and the like. The idea seems plausible and it has certainly made bestsellers out of games like Dr Kawashima’s Brain Training and Big Brain Academy. But a new study by Adrian Owen from Cambridge University casts doubt on the claims that these games can boost general mental abilities.

Owen recruited 11,430 volunteers through a popular science programme on the BBC called “Bang Goes the Theory”. He asked them to play several online games, each intended to improve an individual skill, be it reasoning, memory, planning, attention or spatial awareness. After six weeks, with each player training their brains on the games several times per week, Owen found that the games improved performance in the specific task, but not in any others.

That may seem like a victory but it’s a very shallow one. You would naturally expect people who repeatedly practise the same types of tests to eventually become whizzes at them. Indeed, previous studies have found that such improvements do happen. But becoming the Yoda of Sudoku doesn’t necessarily translate into better all-round mental agility and that’s exactly the sort of boost that the brain-training industry purports to provide. According to Owen’s research, it fails.

All of his recruits sat through a quartet of “benchmarking” tests to assess their overall mental skills before the experiment began. The recruits were then split into three groups who spent the next six weeks doing different brain-training tests on the BBC Lab UK website, for at least 10 minutes a day, three times a week.

For any UK readers, the results of this study will be shown on BBC One tomorrow night (21 April) on Can You Train Your Brain?

The first group faced tasks that taxed their reasoning, planning and problem-solving abilities. The second group’s tasks focused on short-term memory, attention, visual and spatial abilities and maths (a set that was closest in scope to those found in common brain-training games). Finally, the third group didn’t have any specific tasks; instead, their job was to search the internet for the answers to a set of obscure questions, a habit that should be all too familiar to readers of this blog. In each case, the tasks became more difficult as the volunteers improved, so that they presented a constantly shifting challenge.

After their trials, all of the volunteers redid the four benchmarking tests. If their six weeks of training had improved their general mental abilities, their scores in these tests should have gone up. They did, but the rises were unspectacular to say the least. The effects were tiny and the third group who merely browsed for online information “improved” just as much as those who did the brain-training exercises.

By contrast, all of the recruits showed far greater improvements on the tasks they were actually trained in. They could have just become better through repetition or they could have developed new strategies. Either way, their improvements didn’t transfer to the benchmarking tests, even when those were very similar to the training tasks. For example, the first group were well practised at reasoning tasks, but they didn’t do any better at the benchmarking test that involved reasoning skills. Instead, it was the second group, whose training regimen didn’t explicitly involve any reasoning practice, who ended up doing better in this area.

Owen chose the four benchmarking tests because they’ve been widely used in previous studies and they are very sensitive. People achieve noticeably different scores after even slight degrees of brain damage or low doses of brain-stimulating drugs. If the brain-training tests were improving the volunteers’ abilities, the tests should have reflected these improvements.

You could argue that the recruits weren’t trained enough to make much progress, but Owen didn’t find that the number of training sessions affected the benchmarking test scores (even though it did correlate with their training task scores). Consider this – one of the memory tasks was designed to train volunteers to remember larger strings of numbers. At the rate they were going, they would have taken four years of training to remember just one extra digit!

You could also argue that the third group who “trained” by searching the internet were also using a wide variety of skills. Comparing the others against this group might mask the effects of brain training. However, the first and second groups did show improvements in the specific skills they trained in; they just didn’t become generally sharper.
And Owen says that the effects in all three groups were so small that even if the control group had sat around doing nothing, the brain-training effects still would have looked feeble by comparison.

These results are pretty damning for the brain-training industry. As Owen neatly puts it, “Six weeks of regular computerized brain training confers no greater benefit than simply answering general knowledge questions using the internet.”

Is this the death knell for brain training? Not quite. Last year, Susanne Jaeggi from the University of Michigan found that a training programme could improve overall fluid intelligence if it focused on improving working memory – our ability to hold and manipulate information in a mental notepad, such as adding prices on a bill. People who practised this task did better at tests that had nothing to do with the training task itself. So some studies have certainly produced the across-the-board improvements that Owen failed to find. An obvious next step would be to try to identify the differences between the tasks used in the two studies and why one succeeded where the other failed.

Reference: Nature http://dx.doi.org/10.1038/nature09042