Daniel Kish has been blind since he was 13 months old, but you wouldn’t be able to tell. He navigates crowded streets on his bike, camps out in the wilderness, swims, dances and does other activities many would think impossible for a blind person. How does he do it? Kish is a human echolocator, a real-life Daredevil.

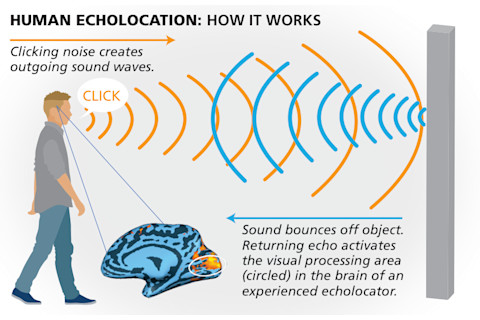

Using a technique similar to the one bats and dolphins use, human echolocators navigate by audio cues reflected off surfaces in the environment. Few people know that this same technique can work for human beings. But as a matter of fact, echolocation comes quite naturally to people like Kish, who are deprived of visual information. “I don’t remember learning this,” he says. “My earliest memories were of detecting things and noting what they might have reminded me of and then going to investigate.”

Kish was born with bilateral retinoblastomas, tiny cancers of the retina, the part of the eye responsible for sensing visual information. Tumors form early in this type of cancer, so aggressive treatment is necessary to ensure they don’t metastasize to the rest of the body.

Unfortunately, the tumors cannot be separated from the retina. Laser treatments are performed to kill them off, followed by chemotherapy. The result is that the retina is destroyed along with the cancer, meaning patients often are left completely blind. Kish lost his first eye at 7 months and the other at 13 months. He has no memory of having eyesight. His earliest vivid memory is from when he was very young, maybe 2½. He climbed out his bedroom window and walked over to a chain-link fence in his backyard. He stood over it, angled his head upward and clicked over it with his tongue, listening for the echo. He could tell there were things on the other side. Curious as to what they were, he climbed over the fence and spent much of his night investigating.

Like Kish, Ben Underwood was a self-taught echolocator who was diagnosed with bilateral retinoblastomas, in his case at the age of 2. After many failed attempts to save his vision by treating the tumors with radiation and chemotherapy, his mother made the difficult decision to have her son’s right eye and left retina removed. This left Ben completely blind.

A couple of years later, when Ben was in the back seat of the car with the window down, he suddenly said, “Mom, do you see that tall building there?” Shocked by his statement, his mother responded, “I see the building, but do you see it?”

It turned out Ben had picked up on the differences in sounds coming from empty space versus a tall building. When Ben was in school, he started clicking with his tongue. At first it was an idle habit, but then he realized he could use the skill to detect the approximate shape, location and size of objects. Soon, Ben was riding a bicycle, skateboarding, playing video games, walking to school and doing virtually anything else an ordinary boy his age could do. He never used a guide dog, a white cane or his hands. Very sadly, Ben passed away in 2009 after the cancer that claimed his eyes returned.

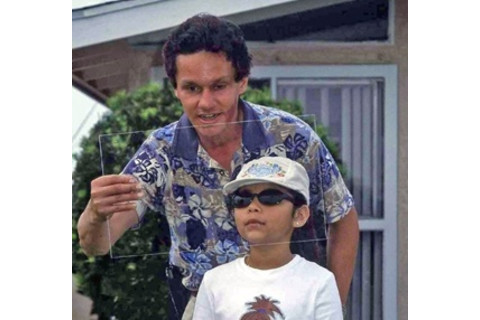

Left, Daniel Kish takes the lead on a tandem bike ride. Right, Ben Underwood, shown in 2006, plays basketball with a friend.

Experiencing Echolocation

For centuries, researchers have been trying to find out how blind people compensate for their loss of vision. It was clear that some blind people occasionally were able to “hear” objects that were apparently making no sounds. But no one knew exactly how blind people did this. And although bat echolocation was documented in 1938, scientists didn’t become seriously interested in the phenomenon until the early years of the Cold War, when military funding made the research feasible. It turns out human echolocation is akin to active sonar and the kind of echolocation used by dolphins and bats, but less fine-grained. While bats can locate objects as small as flies, human echolocators report that objects must be much larger — about the size of a water glass — for them to be locatable.

Philosophers and neuroscientists often talk about phenomenology, or what it’s like to have an experience. If we show you a red ball and ask you about its color, assuming you’re not colorblind, it should be easy for you to answer “red.” However, if we ask you to describe what it’s like to see the color red, you’d have a much harder time answering. By their very nature, questions about phenomenology can be nearly impossible to answer, making it hard to discover exactly what it’s like to experience echolocation.

Indeed, Kish says that, because he has been blind for as long as he can remember, he has nothing to compare his experience to. He can’t really say whether his experience is like seeing. However, he says he definitely has spatial imagery, which has the properties of depth and dimension. Research indicates that the imagery of echolocation is constructed by the same neurology that processes visual data in sighted people. The information isn’t traveling down the optic pathway — the connection from the eyes to the brain — but it ends up in the same place. And some individuals who have gone blind later in life describe the experience as visual, in terms of flashes, shadows or bright experiences. It seems possible that echolocators have visual imagery that is similar to that of sighted people.

Ben’s case provides some evidence of this, as he consistently reported seeing the objects he could detect, not just hearing them. And while self-reports are notoriously unreliable, there is other evidence that Ben really could see with his ears.

Ben had prosthetic eyes that replaced his real ones, but since his eye muscles were still intact, the prostheses moved in different directions, much like real eyes. In the documentary Extraordinary People: The Boy Who Sees Without Eyes, it’s clear Ben’s prosthetic eyes were making saccades in several situations that require focusing quickly on different objects in the peripheral field. Saccades are the quick, coordinated movements of both eyes to a focal point. The documentary never discussed Ben’s saccadic eye movements, but there’s no doubt they occurred and that they matched the auditory stimuli he received. For example, in one scene, he was playing a video game that required destroying objects entering the scene. Although echolocation didn’t give him the ability to “see” the images on a flat screen, Ben could play games based on the sound effects played through the television’s speakers. Like many blind people, Ben used the sound cues to figure out where objects were on the screen. His saccadic eye movements corresponded to the changes in location of the virtual objects.

The primary role of saccadic eye movements is to guarantee high resolution in vision. We can see with high resolution only when images from the visual field fall on the retina’s central region, called the fovea. When images fall on the more peripheral areas of the retina, we don’t see them very clearly. Only a small fraction of an entire scene falls on the fovea at any given time. But rapid eye movements can ensure that you look at many parts of an entire scene in high resolution. Although it’s not immediately apparent, the brain creates a persisting picture based on many individual snapshots.

Many other factors govern eye movements, including changes in and beliefs about the environment, and intended action. For example, your eyes move in the direction of a sudden noise. A belief that someone is hiding in the tree in front of you makes your eyes seek out the tree. And intending to climb a tree triggers your eyes to switch from the ground to the tree so you can inspect it properly.

Saccadic eye movements still occur even when we are only recalling visual imagery and there is no sensory input or external environment to process. This happens because when the brain stores information about the environment, it stores information about eye movements along with it. When your brain generates a visual image, it’s likely a composite of different snapshots of reality, and rapid eye movements help keep the visual image organized and in focus. This dual storage mechanism explains Ben’s saccadic eye movements: The sound stimuli from Ben’s environment triggered his brain to generate spatial imagery matching the sound stimuli, and his saccadic eye movements helped keep the image organized and in focus.

Seeing with Your Ears

Sighted people often use a simple form of echolocation, too, perhaps without even realizing it. When you’re hanging a picture on a wall, one way to locate a stud within it is to knock around and listen for changes in pitch. But when you tap on a hollow space in the wall, you usually don’t hear an actual echo — yet you can tell somehow that the space sounds hollow.

Research shows we can perceive these types of stimuli subconsciously. When we do hear echoes, it’s from sound bouncing back off distant objects. When you click your tongue or whistle toward a nearby object, though, the echo returns so fast that it overlaps the original sounds, making it hard to hear an echo. But the brain unconsciously interprets the combination of the sound thrown in one direction and the returning sound as an alteration in pitch. What makes Ben and Kish so remarkable is that they can use what everyone’s brain unconsciously detects in an active way to navigate the world. And although Ben and Kish may seem superhuman because of their perceptual abilities, research confirms that sighted humans can acquire echolocation, too. After all, the visual cortex does process some sounds, particularly when the brain seeks to match auditory and visual sensory inputs.
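The timing behind that overlap can be sketched with a little arithmetic. The snippet below is an illustration, not something drawn from the research described here. It assumes sound travels at roughly 343 meters per second in room-temperature air and that a tongue click lasts about 3 milliseconds, a hypothetical round figure. The function names are invented for this example.

```python
SPEED_OF_SOUND_M_PER_S = 343.0  # approximate, air near 20 degrees C (assumption)
CLICK_DURATION_MS = 3.0         # hypothetical round figure for a tongue click


def echo_delay_ms(distance_m: float) -> float:
    """Round-trip time, in milliseconds, for sound to reach a surface and return."""
    return 2.0 * distance_m / SPEED_OF_SOUND_M_PER_S * 1000.0


def echo_overlaps_click(distance_m: float, click_ms: float = CLICK_DURATION_MS) -> bool:
    """True if the echo starts arriving before the click itself has finished."""
    return echo_delay_ms(distance_m) < click_ms


for distance in (0.3, 1.0, 30.0):
    delay = echo_delay_ms(distance)
    if echo_overlaps_click(distance):
        label = "begins while the click is still sounding"
    else:
        label = "begins after the click has ended"
    print(f"{distance:5.1f} m: echo returns after {delay:7.2f} ms and {label}")
```

Under these assumptions, any surface closer than roughly half a meter sends sound back before the click is over, which is the situation in which the outgoing and returning sounds blend together.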

American psychologist Winthrop Niles Kellogg began his human-echolocation research program around the time of the Cuban Missile Crisis. His research showed that both blind subjects and blindfolded sighted subjects could learn to detect objects in the environment through sound. A study by another researcher showed that, with some training, both blind and sighted individuals can precisely determine certain properties of objects, such as distance, size, shape, substance and relative motion, from sound alone. While sighted individuals show some ability to echolocate, Kellogg showed that blind echolocators seem to operate a bit differently when collecting sensory data. They move their heads in different directions when spatially mapping an environment, while sighted subjects don’t move their heads when given the same types of tasks.

Interestingly, when sighted individuals are deprived of visual sensory information for an extended period of time, they naturally start echolocating, possibly after only a few hours of being blindfolded. What’s more, with their newfound echolocation skills comes some visual imagery. After a week of being blindfolded, the imagery becomes more vivid. One of Kellogg’s research participants said he experienced “ornate buildings of whitish green marble and cartoon-like figures.”

Is sound alone responsible for echolocators’ ability to navigate the environment? Researchers have wondered whether touch cues, such as the way air moves around objects, can also offer information about the surroundings. Philip Worchel and Karl Dallenbach from the University of Texas at Austin sought to answer these questions in the 1940s. Their experiments involved asking both blind and blindfolded sighted participants to walk toward a board placed at varying distances. Participants were rewarded for learning to detect the board by not walking into it face-first. After multiple trials, both the blindfolded and blind subjects became better able to detect the obstacle. After about 30 trials in hard-soled shoes, blindfolded subjects were as successful at stopping in front of the board as blind subjects. But this ability disappeared when subjects performed the same experiments on carpet or while wearing socks, which muffled the sound their footsteps created. The researchers concluded that the subjects relied on sound emanating from their shoes, implying sound is responsible for navigational ability.

The increased ability to navigate via sound appears to be the result of sound processing in the brain, not merely increased acuity of hearing. One study showed that the blind and the sighted scored similarly on normal hearing tests. But when a recording had echoes, parts of the brain associated with visual perception in sighted people activated in echolocators but not in sighted people. These results showed how echolocators extracted information from sound that wasn’t available to the sighted controls.

Some reports seem to indicate that humans can’t perceive objects that are very close — within 2 meters of them — through echolocation. But a 1962 study by Kellogg at Florida State University showed that blind people can detect obstacles at much shorter distances — 30 to 120 centimeters. Some participants were accurate even within 10 centimeters, suggesting that although subjects aren’t consciously aware of the echo, they still can respond appropriately to echo stimuli.
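To get a feel for how little time the brain has to work with at these distances, the round-trip travel time of sound can be estimated. This is a back-of-the-envelope sketch rather than anything reported in Kellogg’s study. It assumes a speed of sound of about 343 meters per second in room-temperature air, and the names used are made up for illustration.

```python
SPEED_OF_SOUND_M_PER_S = 343.0  # approximate, room-temperature air (assumption)

# Distances mentioned in the text, converted to meters.
DISTANCES_M = {"10 cm": 0.10, "30 cm": 0.30, "120 cm": 1.20, "2 m": 2.00}


def round_trip_ms(distance_m: float) -> float:
    """Time for sound to travel to an obstacle and back, in milliseconds."""
    return 2.0 * distance_m / SPEED_OF_SOUND_M_PER_S * 1000.0


delays = {label: round_trip_ms(d) for label, d in DISTANCES_M.items()}
for label, ms in delays.items():
    print(f"{label:>6}: {ms:.2f} ms")
```

By this estimate the echo from an obstacle 10 centimeters away returns in about 0.6 milliseconds, and even at 2 meters it is back in under 12 milliseconds, which fits the idea that listeners respond to such echoes without consciously hearing them as separate sounds.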

The above cases show that our perceptual experiences involve a lot more than just what we’re consciously aware of. Our brain is primed to accomplish the seemingly superhuman, even at the basic level of perception. It’s an extraordinary organ that creates our rich experiences by turning waves that merely strike the eardrum into complicated, phenomenal representations of our surroundings.

Teaching the Blind to See

Perhaps what is most amazing about echolocation is that it can be taught. Kish wrote his developmental psychology master’s thesis on the subject, developing the first systematic approach to teaching the skill. Now, through his organization, World Access for the Blind, he strives to give the blind nearly the same freedom as sighted people by teaching blind people to navigate with their ears.

Kish teaches a young girl how to echolocate with a process he calls systematic stimulus differentiation. By listening to clicks bouncing off the Plexiglas surface, students learn how to discern an object from its background.

So why don’t all blind people echolocate? The problem, says Kish, is that our society has fostered restrictive training regimens. One example of this restriction is the traditional cane training method.

The military developed the cane techniques more than 60 years ago for blind veterans, people used to living in restricted circumstances. For them, it was easy to adapt to the regimented system. But now, the majority of blind people, including children and the elderly, learn this system.

Kish’s training curriculum differs from tradition by taking an immersive approach intended to activate environmental awareness. It’s a tough-love approach with very little hand-holding. He encourages children to explore their home environment for themselves and discourages family members from interfering unless the child otherwise could be harmed.

Kish then changes the cane his students use during walking. In the traditional technique, the student holds the cane out in front, elbow slightly bent, so the hand is roughly at waist height. With every step, the cane tip lands about where the student’s foot will land, so the cane clears the area where the student is about to step. The student repeatedly taps the cane from left to right, a movement called “two-point touch.” Although computer models support this method, Kish thinks the movement is unnatural. “We are not robots,” he says. “The reality is that the biomechanics do not sustain the kind of regimented movement you have to have in order for that to work; you lose fluidity of motion. You don’t have to be a physical therapist to know that’s a recipe for a wrist problem.”

Then the real fun begins: The students learn to echolocate by systematic stimulus differentiation. Notice the term “detection” isn’t included. No stimulus really occurs in a vacuum, so the process is not so much detection as it is distinguishing one stimulus from another and from its background. The process follows a standard learning structure: Students first learn to differentiate among strong, obvious stimuli and then advance to weaker, less obvious stimuli. Kish establishes a “hook” stimulus by using a plain panel he moves around in the student’s environment. Students don’t need to know what they’re listening for, since the stimulus is selected to be strong enough that it captures the brain’s attention. But the panel doesn’t work for everyone at first. In those cases, he uses a 5-gallon bucket or something else that produces a very distinct sound quality. Once the brain is hooked on the characteristic stimulus, he starts manipulating its features to make the effect subtler.

The next set of exercises helps students learn how to determine what the objects actually are. This essentially involves three characteristics: where things are, how large they are and depth of structure, which refers to the geometric nature of the object or surface. Students answer questions such as, “Is the object coarse or smooth?” “Is it highly solid or sparse?” and “Is it highly reflective or absorbent?” Kish says all those patterns come back as acoustic imprints. The key is to notice how the returning sound differs from the sound that went out. With determined practice, students eventually learn how to differentiate among general environmental stimuli.

Although the organization’s instructors are currently all blind echolocators, Kish anticipates that sighted instructors could teach the skill as well. Several sighted instructors are in training and show promise of using echolocation themselves. His goal is to have sighted instructors performing just as well as blind echolocators. He emphasizes, however, that while both sighted and blind individuals can echolocate, they may have profoundly different phenomenological experiences. In the meantime, Kish is hard at work, teaching the blind how to use their ears to see.

Excerpted from The Superhuman Mind: Free the Genius in Your Brain by Berit Brogaard, Ph.D. and Kristian Marlow, M.A., to be published Aug. 25, 2015, by Hudson Street Press, an imprint of Penguin Publishing Group, a division of Penguin Random House LLC. Copyright 2015 by Berit Brogaard and Kristian Marlow.