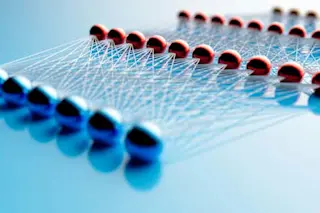

Back in 2019, a group of computer scientists performed a now-famous experiment with far-reaching consequences for artificial intelligence research. At the time, machine vision algorithms were becoming capable of recognizing a wide range of objects, with some recording spectacular results in the standard tests used to assess their abilities.

But there was a problem with the method behind all these tests. Almost all the algorithms were trained on a database of labelled images known as ImageNet. The database contained millions of images that had been carefully described in human-written text to help the machines learn. This effort was crucial for the development of machine vision, and ImageNet became a kind of industry standard.

In this way, the computer scientists used a subset of the labelled images in the dataset to train algorithms to identify a strawberry, a table, a human face and so on. They then used a ...