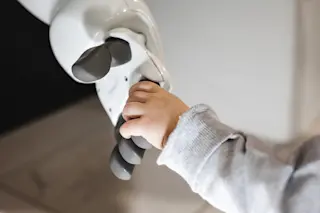

Back around the turn of the millennium, Susan Anderson was puzzling over a problem in ethics. Is there a way to rank competing moral obligations? The University of Connecticut philosophy professor posed the problem to her computer scientist spouse, Michael Anderson, figuring his algorithmic expertise might help.

At the time, he was reading about the making of the film 2001: A Space Odyssey, in which spaceship computer HAL 9000 tries to murder its human crewmates. “I realized that it was 2001,” he recalls, “and that capabilities like HAL’s were close.” If artificial intelligence was to be pursued responsibly, he reckoned, it would also need to solve moral dilemmas.

In the 16 years since, that conviction has become mainstream. Artificial intelligence now permeates everything from health care to warfare, and could soon make life-and-death decisions for self-driving cars. “Intelligent machines are absorbing the responsibilities we used to have, which is ...